Concept

This project explores an immersive interaction system for autonomous vehicles that transforms passengers from passive riders into active participants. By combining voice commands, hand gestures, and augmented reality, the vehicle’s windows and windshield become dynamic digital surfaces that render a 360-degree visual environment. The system allows passengers to interact with information, entertainment, and surroundings in intuitive, spatial ways—redefining the in-car experience beyond traditional screens and touch-based interfaces.

Immersive VR Cabin

Reimagining Automotive Experience

Timeline

Sep - Dec 2024

Team

Juni Kim, Ennis Zhuang

Role

Co-designer & developer

Platforms

AR / VR, Unity

Problem

As vehicles become increasingly autonomous, passengers lose the role of “driver” but gain little in terms of meaningful interaction. Current in-car systems rely heavily on flat touchscreens that feel limiting, distracting, and disconnected from the surrounding environment. This creates a passive, screen-bound experience that fails to take advantage of the vehicle’s physical space or the passenger’s full range of interaction.

Pain Points

Design Challenge

Leading Question

How might we incorporate VR technology into autonomous cars to create an immersive experience?

VR creates a new environment that blends the real world into a virtual one. Passengers get an entirely new experience instead of staring at a row of screens.

Conclusion

We set out to design an infotainment system for leisure vehicles that adds an element of play to the traditional automotive UI. We also wanted an AR feature that gives passengers a more immersive experience inside the car.

Features

Design

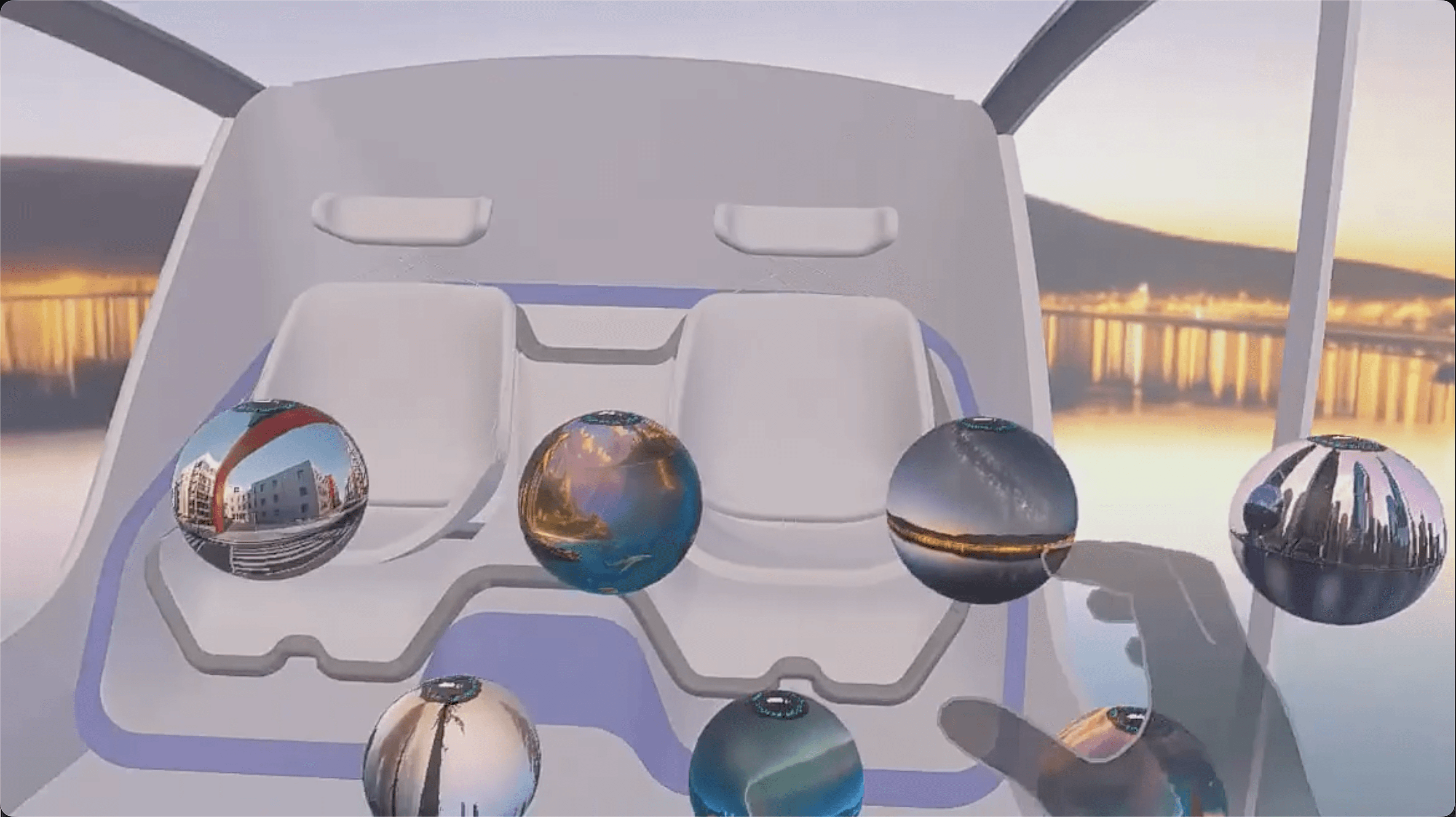

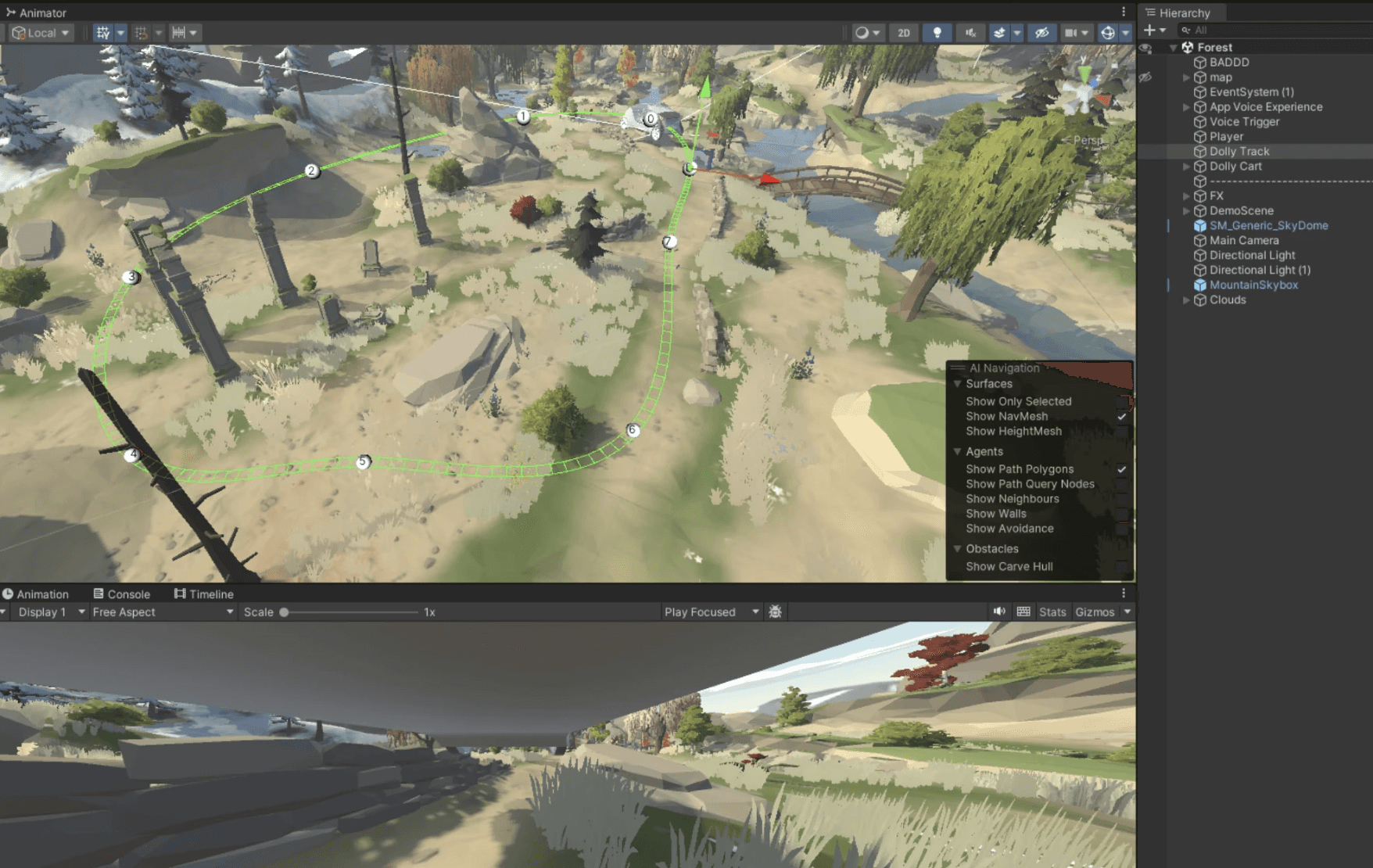

We created a giant sphere and placed the car inside it. When the user grabs the small ball in front of them, the sphere surrounding the car changes to show the selected environment.

Instead of simply swapping the image on the sphere, why not simulate the car driving through a real-time, dynamic environment to create a realistic sense of presence in the virtual world?
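To make the grab-to-swap interaction concrete, here is a minimal, engine-agnostic sketch in Python. It is not the Unity build. It assumes the sphere can show either a still panorama or a looping 360-degree clip, which is one reading of the "dynamic environment" idea. Every name in it (`Environment`, `EnvironmentSphere`, `SelectorBall`, `on_grab`, `tick`) is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    """Hypothetical 360-degree scene: clip_seconds > 0 is a looping clip, 0 is a still image."""
    name: str
    clip_seconds: float = 0.0


@dataclass
class EnvironmentSphere:
    """Hypothetical stand-in for the giant sphere around the car; tracks what it displays."""
    current: Environment
    playback_time: float = 0.0

    def show(self, env: Environment) -> None:
        # Swap the scene on the sphere and restart playback from the beginning.
        self.current = env
        self.playback_time = 0.0

    def tick(self, dt: float) -> None:
        # Called once per frame: advance a looping clip; still images stay at time 0.
        if self.current.clip_seconds > 0:
            self.playback_time = (self.playback_time + dt) % self.current.clip_seconds


@dataclass(frozen=True)
class SelectorBall:
    """Hypothetical small ball in front of the passenger, bound to one environment."""
    environment: Environment


def on_grab(ball: SelectorBall, sphere: EnvironmentSphere) -> None:
    # Grabbing a ball is the only trigger needed to re-skin the whole sphere.
    sphere.show(ball.environment)


# Usage: start in a still city panorama, then grab the ball for a looping 30 s forest drive.
city = Environment("city")
forest = Environment("forest_drive", clip_seconds=30.0)
sphere = EnvironmentSphere(current=city)
on_grab(SelectorBall(forest), sphere)
sphere.tick(20.0)
sphere.tick(15.0)  # 35 s of playback wraps around the 30 s loop
print(sphere.current.name, sphere.playback_time)  # forest_drive 5.0
```

Keeping the ball as a thin pointer to an environment means new scenes can be added without touching the grab logic. Only the sphere needs to know how to render them.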

Final Video

Moving Forward

Future iterations of this system could expand the immersive experience through adaptive environments, personalized AR layers, and multi-passenger interaction logic. By incorporating learning-based preferences, richer environmental data, and expanded gesture and voice vocabularies, the vehicle could evolve into a responsive spatial interface that adapts to each passenger’s needs—transforming autonomous travel into an engaging, intuitive, and customizable experience.